In part 1 of this series, we looked at protecting our AWS environment from external access, minimising our use of static credentials and enforcing least privilege across our roles, users and policies. Now that we’ve secured our environment from an access perspective, we can work on securing our software pipelines against attack.

Continuous Integration and Continuous Delivery (CI/CD) is a common approach to building and delivering software, but it's also a prime target for attackers. Because pipelines are complex to set up, it's easy to make concessions that we later forget about, leaving our supply chain vulnerable.

So, let’s discuss some strategies for securing our pipelines from attack.

Scanning External Dependencies

One of the most common ways attackers poison supply chains is by compromising dependencies. A lot of our code, especially if it's written in a popular language like Python or JavaScript, relies on many dependencies, and those dependencies have dependencies of their own. So, how do we know that the packages we depend on haven't been compromised?
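To see how quickly the dependency tree grows, here is a minimal sketch that uses only Python's standard library (`importlib.metadata`, Python 3.8+) to walk the installed dependency graph of a package and return everything it pulls in transitively. The helper names are hypothetical, and the requirement parsing is deliberately simplified: it ignores version specifiers and environment markers, and skips requirements that only apply to optional extras.

```python
import re
from collections import deque
from importlib import metadata
from typing import Callable, Iterable, Set

# Captures the project name at the start of a requirement string such as
# "urllib3 (<3,>=1.21.1)" or "PySocks!=1.5.7,>=1.5.6; extra == 'socks'".
# Simplified for illustration; this is not a full PEP 508 parser.
_NAME_RE = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def normalise(name: str) -> str:
    """PEP 503-style normalisation so "Foo_Bar" and "foo-bar" compare equal."""
    return re.sub(r"[-_.]+", "-", name).lower()


def direct_requirements(package: str) -> Set[str]:
    """Names an installed distribution declares as requirements (extras skipped)."""
    try:
        requirements = metadata.requires(package) or []
    except metadata.PackageNotFoundError:
        return set()  # not installed locally, so nothing to inspect
    names = set()
    for req in requirements:
        marker = req.split(";", 1)[1] if ";" in req else ""
        if "extra" in marker:
            continue  # only installed when an optional extra is requested
        match = _NAME_RE.match(req)
        if match:
            names.add(normalise(match.group(1)))
    return names


def transitive_dependencies(
    root: str, lookup: Callable[[str], Iterable[str]] = direct_requirements
) -> Set[str]:
    """Breadth-first walk of the dependency graph; returns everything reachable from root."""
    start = normalise(root)
    seen: Set[str] = set()
    queue = deque([start])
    while queue:
        for dep in lookup(queue.popleft()):
            dep = normalise(dep)
            if dep not in seen:
                seen.add(dep)
                queue.append(dep)
    seen.discard(start)  # report only what root pulls in, even if a cycle leads back to it
    return seen


if __name__ == "__main__":
    deps = transitive_dependencies("requests")
    print(f"requests pulls in {len(deps)} packages: {sorted(deps)}")
```

Every name in that result is a package you are trusting without having chosen it directly, which is why the scanners below look at the whole requirements set rather than just your top-level imports.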

Fortunately, scanning your dependencies is easy. I use Python, so that's what I'll be using as an example here, but you can either use much of this tooling with other ecosystems or find open source tooling that supports your ecosystem of choice.

Introducing GuardDog

Datadog, a company you've most likely already heard of, developed a package scanning tool called GuardDog. GuardDog looks for signs that a package has been compromised, and while it won't have a 100% detection rate, it's a good tool for proactively protecting yourself against compromised packages that aren't yet publicly known.

GuardDog picks up on common signs of package compromise, such as code obfuscation, typo-squatting, compromised email domains and downloading executables. This is great, but it also means safe packages can be flagged as malicious. So, it's still important to review any flagged packages yourself to confirm whether they are actually safe.
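To give a feel for how a heuristic like typo-squatting detection works, and why it produces false positives, here is a toy check that flags a package name within a small edit distance of a well-known package without matching it exactly. This is not GuardDog's implementation; the `POPULAR` list and the `max_distance` threshold are assumptions chosen purely for illustration.

```python
from typing import Iterable, Optional

# Hypothetical, tiny list of popular names; real tools rely on much larger, curated data.
POPULAR = ("requests", "numpy", "pandas", "boto3", "django", "flask", "urllib3")


def levenshtein(a: str, b: str) -> int:
    """Edit distance counting single-character insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if the characters match)
            ))
        prev = curr
    return prev[-1]


def possible_typosquat(
    name: str, popular: Iterable[str] = POPULAR, max_distance: int = 2
) -> Optional[str]:
    """Return the popular package that `name` closely resembles, or None."""
    candidate = name.lower()
    targets = tuple(popular)
    if candidate in targets:
        return None  # exact match: this is the real package
    for target in targets:
        if levenshtein(candidate, target) <= max_distance:
            return target
    return None


if __name__ == "__main__":
    for pkg in ("requests", "reqeusts", "urllib4", "httpx"):
        print(pkg, "->", possible_typosquat(pkg))
```

A legitimate package whose name just happens to be a character or two away from a popular one would be flagged in exactly the same way, which is why a human still needs to review anything a scanner reports.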

Using GuardDog is very simple; you can just add the following steps to your CI pipeline:

```bash
# Add to your pre-build actions:
python3 -m pip install guarddog
guarddog pypi verify ./requirements.txt  # path to your requirements file
```

You can also output the results in various formats, allowing you to visualise the findings in a system that consumes JUnit-style reports or something along those lines.

Introducing pip-audit

Unlike GuardDog, pip-audit isn't a tool for discovering malicious pip packages; it's for identifying dependencies with known vulnerabilities. When we're developing software, it's likely we've pinned some of our dependencies and forgotten about them. pip-audit helps us discover vulnerable dependencies we might not know about, so we can resolve them.

Using pip-audit is also very easy; you can just add the following steps to your CI pipeline:

```bash
# Add to your pre-build actions:
python3 -m pip install pip-audit
pip-audit -r ./requirements.txt
```

With pip-audit specifically, I recommend running these steps as part of your pre-merge and pre-deploy pipelines, and also on a schedule, so that you catch packages that have become vulnerable since they were last checked and that you may not otherwise get alerted to.
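For the scheduled run, it can help to turn the report into a short summary for your alerting channel. This is a minimal sketch, assuming you ran `pip-audit -r ./requirements.txt -f json -o audit.json` and that the report has the shape produced by recent pip-audit versions: a `dependencies` list whose entries carry `name`, `version` and a `vulns` list with `id` and `fix_versions`. Check this against the output of the version you actually run; the `Finding` type and function names are hypothetical.

```python
import json
import sys
from typing import List, NamedTuple


class Finding(NamedTuple):
    package: str
    version: str
    vuln_id: str
    fix_versions: List[str]


def parse_report(report_text: str) -> List[Finding]:
    """Flatten an (assumed-format) pip-audit JSON report into one Finding per vulnerability.

    Dependencies that pip-audit skipped (e.g. packages not on PyPI) carry a
    "skip_reason" instead of "vulns", so they simply contribute nothing here.
    """
    report = json.loads(report_text)
    findings = []
    for dep in report.get("dependencies", []):
        for vuln in dep.get("vulns", []):
            findings.append(Finding(
                package=dep["name"],
                version=dep.get("version", "?"),
                vuln_id=vuln["id"],
                fix_versions=list(vuln.get("fix_versions", [])),
            ))
    return findings


def main(path: str) -> int:
    with open(path, encoding="utf-8") as fh:
        findings = parse_report(fh.read())
    for f in findings:
        fix = ", ".join(f.fix_versions) or "no fix available yet"
        print(f"{f.package}=={f.version}: {f.vuln_id} (fixed in: {fix})")
    # A non-zero exit marks the scheduled job as failed so your CI system can alert on it.
    return 1 if findings else 0


if __name__ == "__main__":
    sys.exit(main(sys.argv[1] if len(sys.argv) > 1 else "audit.json"))
```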

Protecting Internal IP From Compromise

While scanning external dependencies is certainly an important step, we need to protect our own packages too, to reduce the risk of an attacker poisoning our code or software delivery process.

There are a few ways we can do this:

- Scan our internal packages just like our external dependencies (as described above)

- Perform additional scans on our internal packages for sensitive data or secret leakage, such as exposed credentials

- Use secure, internal-only artifact repositories to store and pull our packages

Scanning for sensitive data or secret leakage

Thanks to modern authentication mechanisms like OIDC, secret leakage is less common these days, but it’s still possible, especially when you’re debugging. There are a couple of good options you can use to look for sensitive data in your internal repositories:

GitLeaks is a good option for detecting credential patterns that could indicate AWS access keys or API keys are present in your code, whereas Bridgecrew is a more robust solution, although it's a paid product.
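Secret scanners generally combine pattern rules with checks such as entropy to spot likely credentials. The sketch below illustrates that technique with a single rule for the documented AWS access key ID format (`AKIA` for long-term keys or `ASIA` for temporary ones, followed by 16 uppercase letters or digits) plus a Shannon-entropy check for long, random-looking strings. It is not GitLeaks' rule set; the rule names, the 20-character minimum and the 4.0 bits-per-character threshold are assumptions you would tune.

```python
import math
import re
import sys
from collections import Counter
from typing import Iterator, NamedTuple

# AWS access key IDs: "AKIA" (long-term) or "ASIA" (temporary) followed by 16 characters.
AWS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")
# Candidate tokens for the entropy check: 20+ characters from a base64-like alphabet.
TOKEN = re.compile(r"[A-Za-z0-9+/_-]{20,}")
ENTROPY_THRESHOLD = 4.0  # bits per character; an assumed value, tune it for your codebase


class Hit(NamedTuple):
    line_no: int
    rule: str
    match: str


def shannon_entropy(s: str) -> float:
    """Average bits of information per character; random strings score high."""
    if not s:
        return 0.0
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())


def scan_text(text: str) -> Iterator[Hit]:
    for line_no, line in enumerate(text.splitlines(), start=1):
        for m in AWS_KEY_ID.finditer(line):
            yield Hit(line_no, "aws-access-key-id", m.group())
        for m in TOKEN.finditer(line):
            token = m.group()
            if AWS_KEY_ID.search(token):
                continue  # already reported by the more specific rule above
            if shannon_entropy(token) >= ENTROPY_THRESHOLD:
                yield Hit(line_no, "high-entropy-string", token)


if __name__ == "__main__":
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8", errors="replace") as fh:
            for hit in scan_text(fh.read()):
                # Report the location and rule only, so the secret itself isn't echoed into logs.
                print(f"{path}:{hit.line_no}: {hit.rule}")
```

Long hex strings such as commit hashes sit right around this threshold, which is one reason real tools pair entropy with context-specific rules and allow-lists.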

Pre-commit

Using pre-commit is also recommended. pre-commit is a tool you install on your local machine that sets up Git hooks, which run checks when you run git commit, before the commit is created. The benefit is that you can run tools such as GitLeaks on your own device before committing, so you can identify secret leakage before pushing changes to your Git repository.

GitLeaks provides a pre-commit hook, which is documented in the GitLeaks repository.

Accessing packages internally

If possible, use an internal artifact repository from which you can download your own internal packages, as well as packages from public repositories such as npm or PyPI. AWS CodeArtifact is a good option.

The benefit of using a repository like CodeArtifact is that it lets you organise, upload and version your packages in a place you control. You control authentication, who has access to your code and who can upload it. This makes it more difficult for an attacker to get access to your packages, and even harder to push a malicious package to the repository.

There is an AWS blog article on using CodeArtifact in your CI/CD pipelines.
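As a rough sketch of how a pipeline step might point pip at CodeArtifact, the snippet below uses boto3's `codeartifact` client (`get_authorization_token` and `get_repository_endpoint`) to build a pip index URL of the form `https://aws:<token>@<endpoint>simple/` and passes it to pip through the `PIP_INDEX_URL` environment variable, so the token isn't printed to CI logs. The domain, account ID, repository and region defaults, and the `CA_DOMAIN`/`CA_OWNER`/`CA_REPO` variable names, are hypothetical placeholders. If you'd rather not script it, the AWS CLI's `aws codeartifact login --tool pip` configures pip for you.

```python
import os
import subprocess
import sys
from urllib.parse import quote


def build_index_url(endpoint: str, token: str) -> str:
    """Embed a CodeArtifact auth token in a PyPI repository endpoint to form a pip index URL."""
    scheme, sep, rest = endpoint.partition("://")
    if not sep:
        raise ValueError(f"unexpected endpoint format: {endpoint!r}")
    if not rest.endswith("/"):
        rest += "/"
    return f"{scheme}://aws:{quote(token, safe='')}@{rest}simple/"


def codeartifact_index_url(domain: str, owner: str, repository: str, region: str) -> str:
    """Fetch a short-lived token and the PyPI endpoint (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so build_index_url works without boto3 installed

    client = boto3.client("codeartifact", region_name=region)
    token = client.get_authorization_token(
        domain=domain, domainOwner=owner, durationSeconds=900  # 15 minutes, the minimum
    )["authorizationToken"]
    endpoint = client.get_repository_endpoint(
        domain=domain, domainOwner=owner, repository=repository, format="pypi"
    )["repositoryEndpoint"]
    return build_index_url(endpoint, token)


if __name__ == "__main__":
    # Placeholder defaults: replace with your own domain, AWS account ID, repository and region.
    url = codeartifact_index_url(
        domain=os.environ.get("CA_DOMAIN", "my-domain"),
        owner=os.environ.get("CA_OWNER", "111122223333"),
        repository=os.environ.get("CA_REPO", "my-repo"),
        region=os.environ.get("AWS_REGION", "eu-west-2"),
    )
    # Hand the URL to pip via the environment rather than printing it, keeping the token out of logs.
    subprocess.run(
        [sys.executable, "-m", "pip", "install", "-r", "requirements.txt"],
        env=dict(os.environ, PIP_INDEX_URL=url),
        check=True,
    )
```

Short-lived tokens like this fit the approach from part 1: the pipeline's role is granted permission to fetch a token, rather than the pipeline holding a long-lived credential.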

Scanning our code for vulnerabilities

It's important to scan our code for any misconfigurations that might lead to vulnerabilities when we deploy. There are many tools that support this, so I won't dive too deep into it. A good option is Snyk, which can cover most of the checks in this article from a single tool.

Tying it all together

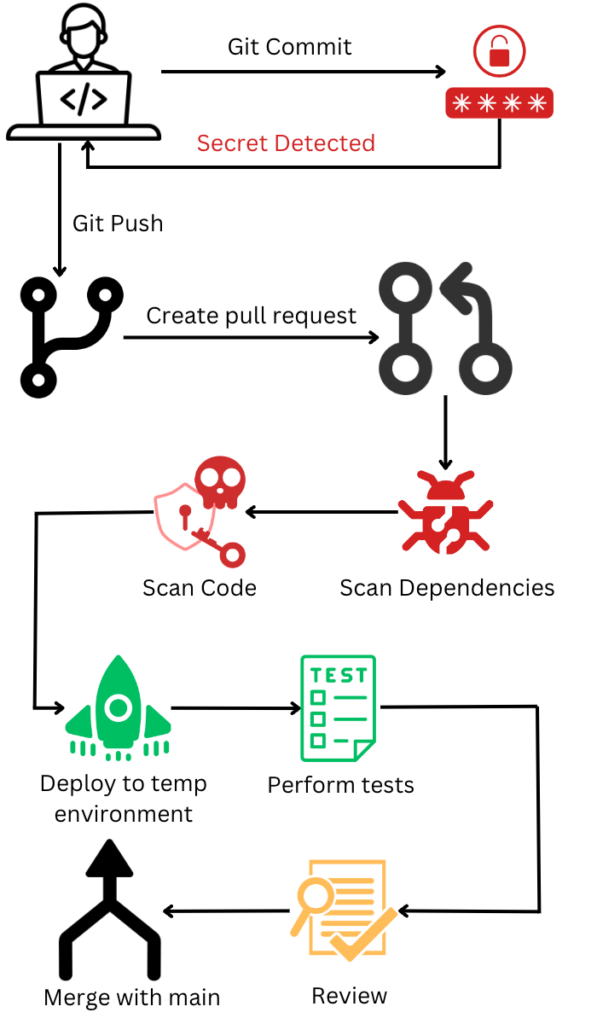

Now, your development workflow should look like so:

You'll now have a secure CI pipeline that can check your software for vulnerabilities and signs of compromise. Combined with locked-down AWS access, a secure CI pipeline and a secure artifact repository for storing and managing your packages make it much harder for attackers to poison your software supply chain.
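If your CI system runs a single script per stage, the checks from this article can be chained in one place. Here is a minimal sketch that runs each check in turn and reports every failure rather than stopping at the first. The command lines are assumptions based on the steps above (GitLeaks' CLI in particular differs between versions), and the runner treats a non-zero exit code as a failure, so confirm how each tool signals findings in the version you use.

```python
import subprocess
import sys
from typing import Dict, List, Sequence

# Assumed command lines; adjust paths and flags to match your repository and tool versions.
CHECKS: Dict[str, List[str]] = {
    "guarddog": ["guarddog", "pypi", "verify", "./requirements.txt"],
    "pip-audit": ["pip-audit", "-r", "./requirements.txt"],
    "gitleaks": ["gitleaks", "detect", "--source", "."],
}


def run_checks(checks: Dict[str, Sequence[str]]) -> List[str]:
    """Run every check and return the names of those that failed (non-zero exit or missing tool)."""
    failed = []
    for name, cmd in checks.items():
        print(f"==> {name}: {' '.join(cmd)}", flush=True)
        try:
            result = subprocess.run(list(cmd))
        except FileNotFoundError:
            print(f"{name}: command not found", file=sys.stderr)
            failed.append(name)
            continue
        if result.returncode != 0:
            failed.append(name)
    return failed


if __name__ == "__main__":
    failures = run_checks(CHECKS)
    if failures:
        print(f"Failed checks: {', '.join(failures)}", file=sys.stderr)
    sys.exit(1 if failures else 0)
```

Running every check before failing means one build log shows all of the problems at once, instead of making you fix and re-run one tool at a time.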

Final Notes

You may be wondering whether there's anything else we can do to improve the security of our supply chain. There is always more we can do: a landing zone solution like the AWS Landing Zone Accelerator helps ensure your environment is configured according to best practice and gives you broad security observability.

I hope you found this guide helpful.
