Over the weekend I built a Discord bot, and this is a quick post on how to set up its AI-enabled cog. Some backstory: I run a Discord server with some mates, and we occasionally share wizard memes, like the one below:

The Plan

What if, instead of creating a meme for every spell we want to cast, we could use a simple Discord command, name a spell, and have the result generated for us?

Sounds good! Let’s build it.

What we’ll need to do

- Create a Discord bot (we’ll use nextcord for this).

- Set up an API we can send a prompt to, to generate our AI response.

- Write a nextcord cog to cast wizard spells.

What we’ll need to do it

- A bit of Python

- A touch of Ollama

- A Discord bot token

- Mixed together in some Docker Compose

Oh, and if you want to follow along exactly, you’ll need to be running Rocky Linux 9 and have an Nvidia GPU installed with at least 4GB of VRAM, and you’ll also need the Nvidia Container Toolkit installed.

Building the Bot

Let’s start by building out a simple nextcord bot. Once this is done, we can create a cog for our bot functionality.

Before we can start, we’ll need to install the following Python packages:

- nextcord

- langchain-community

Now that’s done, let’s create our bot:

# /bot.py
import nextcord
from nextcord.ext import commands

from cogs.data.environment import API_TOKEN

bot = commands.Bot()

# Names of the modules under /cogs to load as extensions
cogs = [
    'fun'
]

def load_cogs(cogs: list):
    for cog in cogs:
        # load_extension takes a single dotted module path
        bot.load_extension(f'cogs.{cog}')

if __name__ == '__main__':
    load_cogs(cogs)
    bot.run(API_TOKEN)

Even though we’ve got our core bot, the code that casts our spells doesn’t exist yet. So, let’s build that.
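Before that, it helps to know what load_extension expects from a cog module. Roughly speaking, nextcord imports the module and calls a module-level setup(bot) function, which is why the cog file will need one. The sketch below imitates that contract with stand-in objects. FakeBot, load_extension_sketch and the in-memory module are hypothetical names for illustration, not nextcord's actual implementation.

```python
import types


class FakeBot:
    """Hypothetical stand-in for commands.Bot; it only records added cogs."""

    def __init__(self):
        self.cogs = []

    def add_cog(self, cog):
        self.cogs.append(cog)


def load_extension_sketch(bot, module):
    """Simplified imitation of the extension contract: call module.setup(bot)."""
    setup = getattr(module, "setup", None)
    if setup is None:
        raise RuntimeError(f"{module.__name__} has no setup(bot) function")
    setup(bot)


# Build a throwaway module in memory instead of importing a real cog file.
fun_module = types.ModuleType("cogs.fun")
fun_module.setup = lambda bot: bot.add_cog("Fun cog placeholder")

bot = FakeBot()
load_extension_sketch(bot, fun_module)
print(bot.cogs)
```

A module without setup fails loudly here, which is a useful way to think about the error you would hit if the cog file forgot to define it.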

Building the Cog

Create a new folder called /cogs and another folder under it called /data. Now, let’s write some code:

# /cogs/fun.py
import nextcord, random
from nextcord.ext import commands
from langchain_community.llms import Ollama

from .data.messages import cast_spell
from .data.environment import OLLAMA_URL, SERVER_ID

# Our cog class
class Fun(commands.Cog):
    def __init__(self, bot):
        self.bot = bot
        self.model = Ollama(
            model='llama2-uncensored',
            base_url=OLLAMA_URL
        )

    # Creating the /cast command
    @nextcord.slash_command(name="cast", description="Cast a spell against your target", guild_ids=[SERVER_ID])
    async def cast(self, interaction: nextcord.Interaction, spell: str, target: str):
        # Deferring means our command won't time out while the model responds
        await interaction.response.defer()

        # Each cast has a 50/50 chance of succeeding
        successful = random.choice([True, False])
        if successful:
            response = self.model.invoke(
                f"You have cast the {spell} against {target}, describe its effects"
            )
        else:
            response = self.model.invoke(
                f"You have cast the {spell} against {target}, and it failed, meaning there are no effects."
            )

        await interaction.followup.send(embed=cast_spell(spell, target, response, successful))

# Entry point nextcord calls when the extension is loaded
def setup(bot):
    bot.add_cog(Fun(bot))

Defining some data

Create a few files under /cogs/data: an environment.py file, an images.py file and a messages.py file.

# /cogs/data/environment.py
import os

API_TOKEN = os.getenv("API_TOKEN")
SERVER_ID = int(os.getenv("SERVER_ID"))
OLLAMA_URL = os.getenv("OLLAMA_URL")

# /cogs/data/images.py
spell_images = [
    "https://i.imgur.com/hXPMnvz.png",
    "https://i.imgur.com/TzBTp0U.png",
    "https://i.imgur.com/mg3pS7M.png",
    "https://i.imgur.com/M9q7uCC.png"
]

failed_spell_images = [
    "https://i.imgur.com/NO4Nhll.png",
    "https://i.imgur.com/SuS9gN5.png",
    "https://i.imgur.com/TUx0VYT.png",
    "https://i.imgur.com/nKdbcwV.png"
]

# /cogs/data/messages.py
import random

from nextcord import Embed, Color

from .images import spell_images, failed_spell_images

def cast_spell(spell, target, response, success):
    if success:
        embed = Embed(
            title=f"I CAST {spell.upper()}",
            description=f"Spell **SUCCEEDED** cast against {target}\n\n*{response}*",
            color=Color.green()
        )
        # Pick a random image from the success list
        embed.set_image(url=random.choice(spell_images))
    else:
        embed = Embed(
            title=f"I CAST {spell.upper()}",
            description=f"Spell cast **FAILED** against {target}\n\n*{response}*",
            color=Color.red()
        )
        # Pick a random image from the failure list
        embed.set_image(url=random.choice(failed_spell_images))
    return embed

Now we’ve written our core bot, let’s deploy it.
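Before deploying, one caveat about environment.py: os.getenv returns None for a missing variable, so int(os.getenv("SERVER_ID")) fails with a TypeError that doesn't name the variable. A minimal sketch of a stricter loader follows. require_env and load_server_id are hypothetical helpers, not part of the original bot, and the example value is a placeholder.

```python
import os


def require_env(name: str) -> str:
    """Return the variable's value, or fail with a message naming it."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


def load_server_id() -> int:
    """Parse SERVER_ID as an integer, as environment.py does."""
    return int(require_env("SERVER_ID"))


# Placeholder value for demonstration only; a real run would read it from .env.
os.environ.setdefault("SERVER_ID", "123456789012345678")
print(load_server_id())
```

With something like this in place, a forgotten entry in the env file would show up as a clear startup error rather than a bare TypeError.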

Deploying Our Bot

Let’s use Docker Compose to deploy our bot.

Creating our Dockerfile

Create a new file called Dockerfile. This is how we’ll build our container.

# /Dockerfile
FROM python:3.10-slim

WORKDIR /bot

COPY ./bot .

RUN pip install --upgrade pip
RUN pip install -r requirements.txt

CMD ["python", "bot.py"]

Creating our compose file

Now, we need to create a compose.yaml file, which will create our docker container services.

# /compose.yaml
services:
  bot:
    build:
      context: .
    volumes:
      - ./bot:/bot
    environment:
      - AUDIT_CHANNEL_ID=${AUDIT_CHANNEL_ID}
      - API_TOKEN=${API_TOKEN}
      - SERVER_ID=${SERVER_ID}
      - OLLAMA_URL=http://ollama:11434
    depends_on:
      - ollama
    restart: unless-stopped

  ollama:
    image: ollama/ollama:latest
    volumes:
      - ./ollama:/root/.ollama
      - ./ollama.sh:/ollama.sh
    runtime: nvidia
    environment:
      - NVIDIA_VISIBLE_DEVICES=all
      - NVIDIA_DRIVER_CAPABILITIES=compute,utility
    entrypoint: ['/usr/bin/bash', '/ollama.sh']

Create a helper script

Let’s create an ollama.sh script that we’ll use to bootstrap our ollama container.

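# /ollama.sh
#!/bin/bash

# Start the Ollama server in the background and remember its PID
ollama serve &
pid=$!

# Short pause so the server is up before pulling (the delay is an assumption; adjust as needed)
sleep 5
ollama pull llama2-uncensored:latest

wait $pid

Creating our env file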

Now, let’s create another file called .env. This will hold the environment variables that will be passed to our containers.

# /.env
API_TOKEN=my-discord-bot-token
SERVER_ID=my-discord-server-id

Building and running our containers

Let’s run the command now:

docker compose up --build --detach

This will build the services, and you should see your bot in your Discord server (the llama2-uncensored model can take a while to pull down, but this only needs to be done once).
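If the bot comes up before the model has finished downloading, the first casts are likely to fail. One way to check progress is Ollama's GET /api/tags endpoint, which lists the models available locally. The sketch below is not part of the original bot: model_is_ready and fetch_tags are hypothetical names, the sample payload is illustrative, and the http://ollama:11434 address only resolves from inside the compose network (for example from the bot container), since the compose file publishes no ports.

```python
import json
import urllib.request


def model_is_ready(tags_payload: dict, model: str) -> bool:
    """Check an /api/tags response for a model name, with or without a tag."""
    names = [m.get("name", "") for m in tags_payload.get("models", [])]
    return any(n == model or n.split(":")[0] == model for n in names)


def fetch_tags(base_url: str) -> dict:
    """Fetch the list of local models from a running Ollama server."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)


# Example payload shaped like an /api/tags response (values are illustrative).
sample = {"models": [{"name": "llama2-uncensored:latest"}]}
print(model_is_ready(sample, "llama2-uncensored"))
```

Inside the bot container, calling model_is_ready(fetch_tags("http://ollama:11434"), "llama2-uncensored") in a retry loop would be one way to wait for the pull to finish.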

Trying Out The Bot

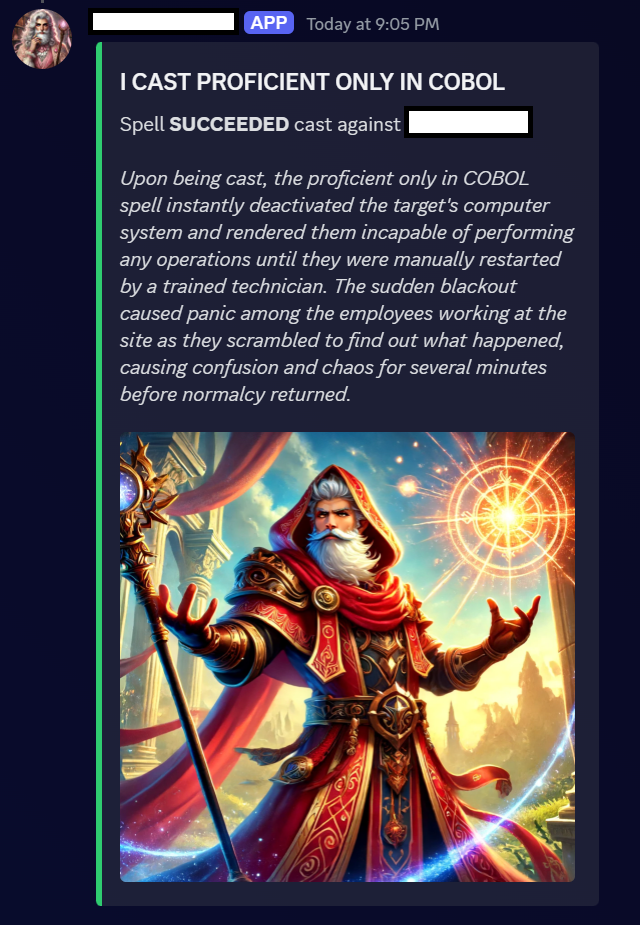

Fortunately for me, someone cast a spell on me using the bot, so I was able to test it out.

Look at that! That’s awesome!

Now, instead of creating a meme for every spell we want to send, we can make it more engaging by using a command and letting llama2 describe our spell. To top it off, each spell cast has a 50/50 chance of succeeding.
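The coin flip and the two prompts can also be pulled out of the cog into plain functions, which makes them easy to test without Discord or Ollama. This is a sketch rather than the bot's actual code: roll_success and build_prompt are hypothetical names, and the prompt wording mirrors the cog's.

```python
import random


def roll_success(rng: random.Random) -> bool:
    """Flip a fair coin, as the cog does with random.choice([True, False])."""
    return rng.choice([True, False])


def build_prompt(spell: str, target: str, successful: bool) -> str:
    """Return the prompt sent to the model for a successful or failed cast."""
    if successful:
        return f"You have cast the {spell} against {target}, describe its effects"
    return (
        f"You have cast the {spell} against {target}, "
        "and it failed, meaning there are no effects."
    )


rng = random.Random(0)  # seeded only so repeated runs behave the same
outcome = roll_success(rng)
print(outcome, build_prompt("fireball", "the goblin", outcome))
```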

Final Notes

You can check out my GitHub for the code to the bot. I hope you found this silly little journey funny.
